This article is the second installment in our exploration of the Resilience4j library and its algorithms. Resilience algorithms play a crucial role in enhancing the robustness of applications by preventing failures, handling transient issues, and maintaining system stability. These algorithms, such as Circuit Breaker, Rate Limiter, Bulkhead, and Retry, help mitigate risks associated with network calls, service failures, and unexpected spikes in traffic. By implementing these patterns, developers can build resilient, fault-tolerant applications capable of gracefully handling failures and ensuring a seamless user experience.

In this second part of the overview, I will cover the two remaining patterns: Rate Limiter and Retry.

Rate Limiter

The Rate Limiter pattern is designed to control the flow of requests to a system by restricting the number of allowed calls within a specified time window.

Resilience4j provides a RateLimiter that divides time, measured in nanoseconds from the start of the epoch, into cycles. The length of each cycle is set by limitRefreshPeriod, which lets developers tune request rate control to their system's requirements. At the start of every cycle, the RateLimiter resets the number of available permissions to limitForPeriod, so no more than that many calls are let through per cycle. From the caller’s perspective, permissions simply refresh each cycle and every request is either allowed or restricted. Under the hood, however, the default AtomicRateLimiter implementation is optimized to skip this refresh while the RateLimiter is not being actively used.
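To make the cycle mechanics concrete, here is a simplified, single-threaded sketch in plain Java. It is not Resilience4j’s AtomicRateLimiter (there is no thread safety, no permission reservation, and no waiting), and the names CycleRateLimiterSketch and tryAcquire are hypothetical. Time is passed in explicitly as nanoseconds so the behaviour is deterministic, and permissions are refreshed lazily, only when a call arrives in a new cycle, which mirrors the idea of skipping refreshes while the limiter is idle.

```java
import java.time.Duration;

/** Hypothetical, simplified cycle-based rate limiter; not Resilience4j's implementation. */
class CycleRateLimiterSketch {
    private final long cycleNanos;     // length of one cycle (the limitRefreshPeriod role)
    private final int limitForPeriod;  // permissions restored at the start of each cycle
    private final long startNanos;     // reference point from which cycles are counted
    private long activeCycle = -1;     // cycle in which permissions were last refreshed
    private int permissions;           // permissions left in the active cycle

    CycleRateLimiterSketch(Duration refreshPeriod, int limitForPeriod, long startNanos) {
        this.cycleNanos = refreshPeriod.toNanos();
        this.limitForPeriod = limitForPeriod;
        this.startNanos = startNanos;
    }

    /** Takes one permission at time nowNanos; returns false if the current cycle is exhausted. */
    boolean tryAcquire(long nowNanos) {
        long currentCycle = (nowNanos - startNanos) / cycleNanos;
        // Lazy refresh: the reset only happens when a call arrives in a new cycle,
        // so an idle limiter does no work at all.
        if (currentCycle != activeCycle) {
            activeCycle = currentCycle;
            permissions = limitForPeriod;
        }
        if (permissions > 0) {
            permissions--;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        CycleRateLimiterSketch limiter =
                new CycleRateLimiterSketch(Duration.ofSeconds(5), 10, 0L);
        int allowed = 0;
        for (int i = 0; i < 15; i++) {
            if (limiter.tryAcquire(1_000L)) { // all 15 calls arrive early in cycle 0
                allowed++;
            }
        }
        System.out.println(allowed); // 10
        // 5 seconds after the start, cycle 1 begins and permissions are restored
        System.out.println(limiter.tryAcquire(Duration.ofSeconds(5).toNanos())); // true
    }
}
```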

Let’s look at an example rate limiter configuration:

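RateLimiterConfig config = RateLimiterConfig.custom()
        .limitRefreshPeriod(Duration.ofSeconds(5)) // length of one cycle
        .limitForPeriod(10)                        // permissions available per cycle
        .timeoutDuration(Duration.ofSeconds(50))   // how long a caller may wait for a permission
        .build();

The library keeps configuration simple: this config allows at most 10 calls in each 5-second refresh period, and a caller that finds no permission left may wait up to 50 seconds for one before its call is rejected. With the config in place, let’s run a simple test.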

//SOME CODE

// Each run() first acquires a permission from rateLimiter, then calls Counter.doSomething()
CheckedRunnable restrictedCall = RateLimiter
        .decorateCheckedRunnable(rateLimiter, Counter::doSomething);

for (int i = 0; i < 100; i++) {
    try {
        restrictedCall.run();
    } catch (Throwable throwable) {
        throwable.printStackTrace();
    }
}

//SOME CODE

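import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class Counter {

    private static int counter = 0;

    // Prints a timestamped line so the pacing of the calls is visible in the output
    public static void doSomething() {
        String timestamp =
                LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss"));
        System.out.println("[" + timestamp + "] Doing something " + counter++);
    }
}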

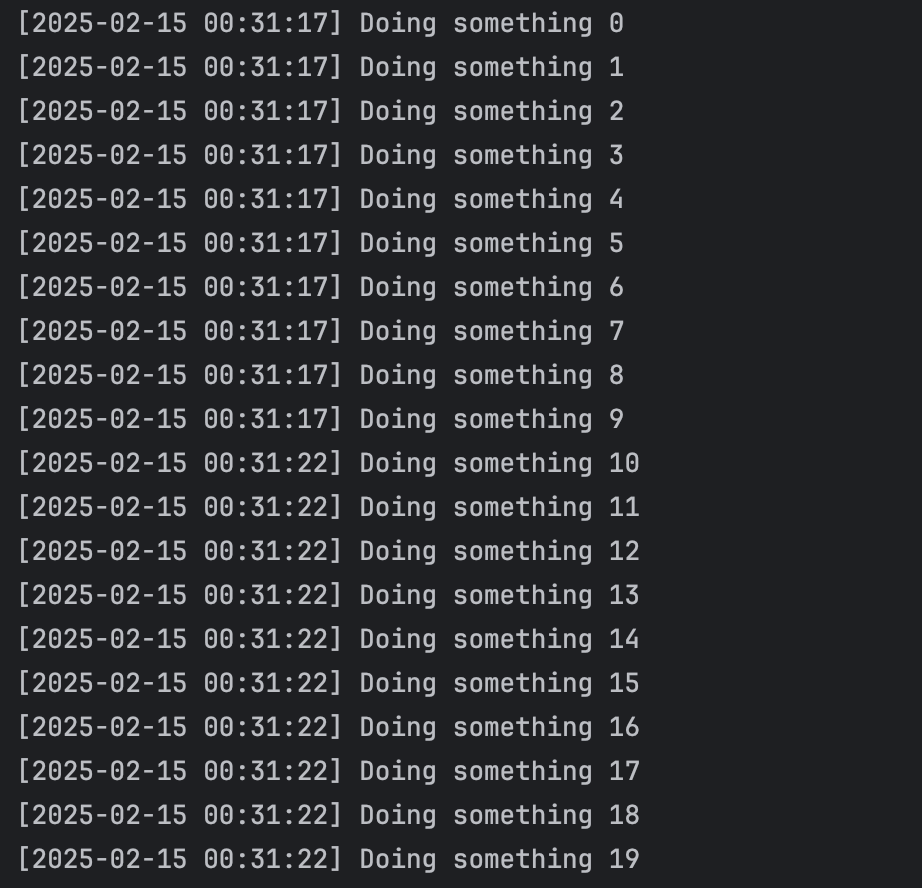

Result is:

With limitForPeriod set to 10 and limitRefreshPeriod set to 5 seconds, the first 10 calls run immediately. The 11th call finds no permission left and blocks until the next cycle begins, and the same pattern repeats every 5 seconds. No call is rejected, because the longest possible wait (less than one 5-second cycle) is well within the 50-second timeoutDuration.

This mechanism prevents excessive load on services, keeping them responsive and available under high traffic. By enforcing rate limits, applications can avoid resource exhaustion, protect APIs from abuse, and comply with service-level agreements.
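The timing of this test can be checked with a little arithmetic. The sketch below uses hypothetical helper names and assumes a single caller issuing calls back to back from the start of cycle 0; with many concurrent callers, Resilience4j can reserve permissions from later cycles, so real waits may span more than one period. It computes which cycle a given call lands in, and whether a caller that has to wait for the next cycle stays within timeoutDuration.

```java
import java.time.Duration;

/** Hypothetical arithmetic helpers for a single caller; not part of Resilience4j. */
class RateLimitTimelineSketch {

    /** 0-based cycle in which the 0-based callIndex-th call gets its permission. */
    static long cycleOfCall(int callIndex, int limitForPeriod) {
        return callIndex / limitForPeriod;
    }

    /**
     * A caller that finds no permission left must wait until the next cycle starts.
     * Returns true if that wait fits within timeoutDuration (the caller waits),
     * false if it does not (the call would be rejected).
     */
    static boolean waitFitsTimeout(long elapsedNanos, Duration refreshPeriod, Duration timeoutDuration) {
        long periodNanos = refreshPeriod.toNanos();
        long waitNanos = periodNanos - (elapsedNanos % periodNanos);
        return waitNanos <= timeoutDuration.toNanos();
    }

    public static void main(String[] args) {
        Duration period = Duration.ofSeconds(5);
        long lastCycle = cycleOfCall(99, 10); // the 100th call
        System.out.println("Call 100 runs in cycle " + lastCycle
                + ", which starts " + lastCycle * period.getSeconds() + " s in"); // cycle 9, 45 s in
        long arrival = Duration.ofMillis(100).toNanos(); // 0.1 s into an exhausted cycle
        System.out.println(waitFitsTimeout(arrival, period, Duration.ofSeconds(50))); // true
        System.out.println(waitFitsTimeout(arrival, period, Duration.ofSeconds(1)));  // false
    }
}
```

Under these assumptions, the 100 calls span ten cycles, and shrinking timeoutDuration below the remaining time of a cycle is what would turn waiting into rejection.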

Retry

The Retry Pattern is a key strategy in microservices architecture designed to handle temporary failures in service-to-service communication. In distributed systems, microservices often interact over networks, where issues like timeouts, network instability, or momentary service unavailability can cause request failures. Rather than immediately considering these failures as critical, the Retry Pattern ensures that failed requests are retried a predefined number of times before being classified as permanent failures. This approach helps recover from intermittent disruptions without impacting overall system stability. The pattern is highly configurable, allowing control over the number of retries, waiting time between attempts, and backoff strategies.
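Stripped of any library, the core of the pattern fits in a few lines. This plain-Java sketch is illustrative only: RetrySketch and callWithRetry are hypothetical names, not Resilience4j API. It counts the first call as an attempt, pauses between attempts, and grows the pause by a multiplier to illustrate exponential backoff; a multiplier of 1.0 gives a fixed wait.

```java
import java.time.Duration;
import java.util.function.Supplier;

/** Hypothetical, minimal retry helper; not Resilience4j's implementation. */
class RetrySketch {

    /**
     * Calls the supplier up to maxAttempts times; the first call counts as attempt 1.
     * After each failure except the last it sleeps, then multiplies the wait by
     * multiplier (1.0 keeps the wait fixed, 2.0 doubles it every time).
     */
    static <T> T callWithRetry(Supplier<T> call, int maxAttempts,
                               Duration initialWait, double multiplier) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long waitMillis = initialWait.toMillis();
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                if (attempt < maxAttempts) {
                    sleep(waitMillis);
                    waitMillis = (long) (waitMillis * multiplier);
                }
            }
        }
        // Every attempt failed: treat it as a permanent failure and surface the last error
        throw lastFailure;
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }
}
```

With maxAttempts set to 3 and a dependency that fails twice before succeeding, the third call returns normally; had it failed a third time, the last exception would be rethrown to the caller.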

Let’s dive into the Resilience4j library. Just like the other modules, the Retry module provides an in-memory RetryRegistry, which you can use to manage Retry instances. First, configure the retry values:

RetryConfig config = RetryConfig.custom()
        .maxAttempts(3)                      // total attempts, including the initial call
        .waitDuration(Duration.ofSeconds(2)) // fixed pause between attempts
        .build();

Next, let’s build a dependency service that returns a result when the call count is a prime number and throws an exception otherwise.

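import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class DataController1 {

    // Counts incoming requests (not thread-safe, which is acceptable for this demo)
    private static int attempt = 0;

    @GetMapping("/data")
    public String getData() {
        attempt++;
        // Simulate a flaky dependency: succeed only when the request count is prime
        if (!isPrime(attempt)) {
            throw new RuntimeException("Service is temporarily unavailable");
        }
        return "Success from Service B! Count: " + attempt;
    }

    // Trial division using the 6k ± 1 optimization
    private boolean isPrime(int n) {
        if (n <= 1) {
            return false;
        }
        if (n == 2 || n == 3) {
            return true;
        }
        if (n % 2 == 0 || n % 3 == 0) {
            return false;
        }
        for (int i = 5; i <= Math.sqrt(n); i += 6) {
            if (n % i == 0 || n % (i + 2) == 0) {
                return false;
            }
        }
        return true;
    }
}

On the calling side, the controller wraps the remote call with the retry mechanism as follows: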

//SOME CODE

RetryRegistry registry = RetryRegistry.of(config);
Retry retry = registry.retry("dependencyService");
// Wrap the remote call so failed attempts are retried according to config
Supplier<String> retryableCall = Retry.decorateSupplier(retry, service::makeCall);
String serviceResponse = retryableCall.get();
System.out.println(serviceResponse);
return serviceResponse;

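import org.springframework.web.client.RestTemplate;

// Fully qualified because the class itself is named Service
@org.springframework.stereotype.Service
public class Service {

    private final RestTemplate restTemplate = new RestTemplate();

    public String makeCall() {
        try {
            return restTemplate.getForObject("http://dependency-service:8080/data", String.class);
        } catch (Exception e) {
            // Log the failed attempt and rethrow so the Retry decorator sees the failure
            System.out.println("Exception occurred");
            throw e;
        }
    }
}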

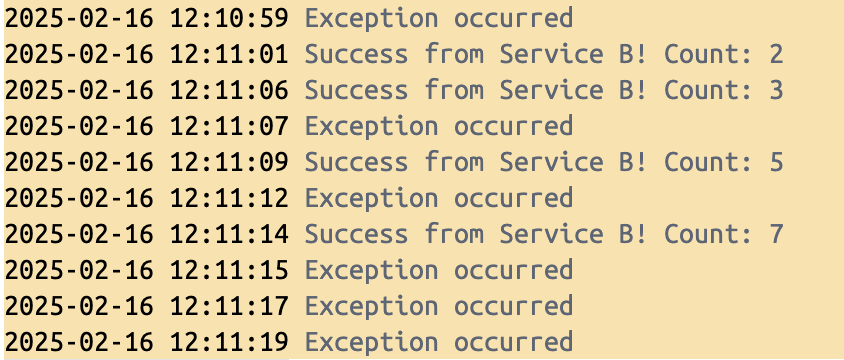

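As a result, every successful response carries a prime count: calls that hit a non-prime count fail and are retried. However, when the counter reaches 8, 9, and 10, three consecutive non-prime counts consume all three attempts of a single request, so the retry mechanism exhausts maxAttempts and ultimately rethrows the exception.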
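To check this reasoning without running two Spring services, the hypothetical simulation below replays the scenario in plain Java: a counter-based dependency that only succeeds on prime counts, and a client that gives each request up to three attempts, as maxAttempts(3) does. The 2-second waitDuration is left out because it does not affect which counts succeed, and an exhausted request returns a message instead of rethrowing so that the whole sequence can be printed.

```java
/**
 * Hypothetical offline replay of the demo: the dependency succeeds only when its
 * request counter is prime, and each client request gets up to maxAttempts calls.
 */
class PrimeRetrySimulation {

    private int dependencyCounter = 0; // plays the role of DataController1.attempt

    static boolean isPrime(int n) {
        if (n < 2) {
            return false;
        }
        for (int i = 2; (long) i * i <= n; i++) {
            if (n % i == 0) {
                return false;
            }
        }
        return true;
    }

    /** Simulated dependency call: fails unless the updated counter is prime. */
    private String callDependency() {
        dependencyCounter++;
        if (!isPrime(dependencyCounter)) {
            throw new RuntimeException("Service is temporarily unavailable");
        }
        return "Success from Service B! Count: " + dependencyCounter;
    }

    /** One client request: up to maxAttempts calls, the first call included. */
    String request(int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return callDependency();
            } catch (RuntimeException e) {
                lastFailure = e;
            }
        }
        // Returned rather than rethrown so the whole sequence can be printed
        return "Failed after " + maxAttempts + " attempts: " + lastFailure.getMessage();
    }

    public static void main(String[] args) {
        PrimeRetrySimulation simulation = new PrimeRetrySimulation();
        for (int i = 0; i < 6; i++) {
            System.out.println(simulation.request(3));
        }
    }
}
```

Running main prints successes for counts 2, 3, 5, and 7, then a failure for the request that consumes counts 8, 9, and 10, and finally a success for count 11.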
