Apache Kafka, originally developed at LinkedIn, open-sourced in 2011, and later donated to the Apache Software Foundation, has become a cornerstone of modern data architectures. It was created to handle large volumes of real-time event data efficiently. Before Kafka, companies relied on traditional message queues or batch processing systems, which often struggled with scalability, fault tolerance, and high-throughput data ingestion.

Kafka introduces a publish-subscribe model that allows applications to produce and consume data asynchronously, decoupling services and enabling event-driven architectures. With its durability, horizontal scalability, and ability to handle millions of messages per second, Kafka has transformed how IT systems process data, making it a crucial technology in big data analytics, microservices, and real-time applications.

Publish-subscribe model

In the publish-subscribe (pub-sub) model, events act as messages that are published by producers (publishers) and consumed by subscribers who have expressed interest in specific event types or topics. Here’s how events behave in this model:

- Event Creation (Publishing) – A publisher generates an event and sends it to a central broker or event bus. The event typically contains a message payload and metadata, such as a timestamp and event type.

- Event Routing – The event broker determines which subscribers are interested in the event based on predefined topics, channels, or filtering rules.

- Event Delivery (Subscription Handling) – The broker forwards the event to all relevant subscribers. Depending on the system, this can be done in real-time (push-based) or when the subscriber pulls messages from the broker.

- Event Consumption – Subscribers receive the event and process it according to their application logic. This can trigger actions such as updating a database, sending notifications, or triggering another microservice.

- Event Persistence (Optional) – Some pub-sub systems store events for a period, allowing new subscribers to consume past events (e.g., Kafka’s event retention feature).
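The steps above can be sketched with a small in-memory broker. This is only a toy illustration of topic-based routing, push delivery, and optional replay of retained events; the names `Event`, `InMemoryBroker`, and the `replay` flag are hypothetical and are not part of any Kafka client API.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Event:
    """An event: a payload plus metadata (topic and timestamp)."""
    topic: str
    payload: Any
    timestamp: float = field(default_factory=time.time)


class InMemoryBroker:
    """Toy broker (hypothetical): routes events by topic and retains them."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self._log: Dict[str, List[Event]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None],
                  replay: bool = False) -> None:
        # Optional persistence: a late subscriber can first consume past events.
        if replay:
            for event in self._log[topic]:
                handler(event)
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> Event:
        event = Event(topic, payload)           # event creation
        self._log[topic].append(event)          # retention
        for handler in self._subscribers[topic]:  # routing by topic
            handler(event)                      # push-based delivery
        return event


broker = InMemoryBroker()
received_orders: List[Any] = []
broker.subscribe("orders", lambda e: received_orders.append(e.payload))

broker.publish("orders", {"id": 1})      # delivered to the "orders" subscriber
broker.publish("payments", {"id": 99})   # no subscriber, only retained

# A subscriber that joins later can still read the retained "orders" event.
late_orders: List[Any] = []
broker.subscribe("orders", lambda e: late_orders.append(e.payload), replay=True)
```

Note that Kafka itself is pull-based: consumers poll the broker rather than having events pushed to them. The push-style handler here is just the simplest way to show routing.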

Elements of Kafka

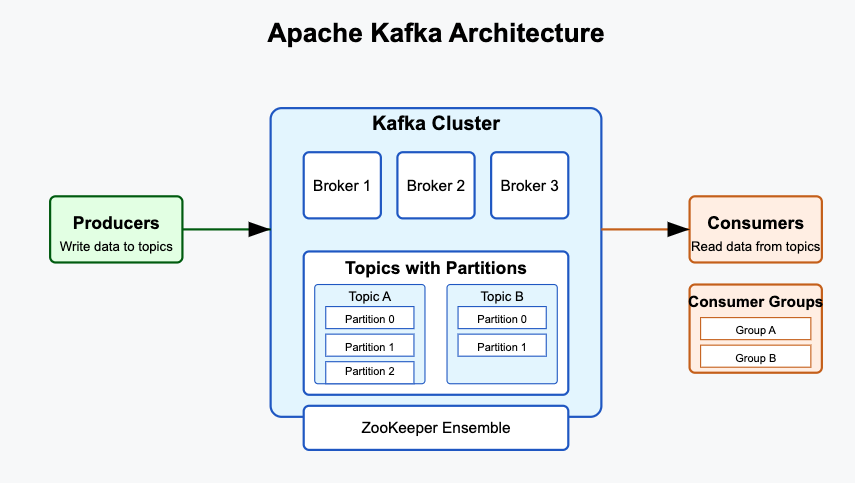

Apache Kafka consists of several core elements that work together:

- Producer – A producer is responsible for publishing messages to Kafka topics. It sends data to the Kafka broker asynchronously, ensuring high throughput and low latency. Producers can also define partitioning strategies to control message distribution across partitions.

- Topic – A topic is a logical category where messages are published. It acts as a feed name to which producers send data and from which consumers read. Topics can be partitioned to allow parallel processing and load balancing.

- Partition – Each topic is divided into one or more partitions, enabling Kafka to distribute data across different brokers. Partitions provide scalability since multiple consumers can read from different partitions in parallel. Messages are ordered within a partition, but Kafka does not guarantee ordering across partitions.

- Broker – A Kafka broker is a server that stores and serves messages. Multiple brokers form a Kafka cluster, ensuring data distribution, replication, and fault tolerance. Brokers handle incoming messages from producers and serve them to consumers.

- Consumer – A consumer reads messages from a Kafka topic. Consumers subscribe to topics and process messages. They can be part of a consumer group, where each consumer reads from different partitions, enabling parallel data processing.

- Consumer Group – A set of consumers that share the load of processing messages from a topic. Each message in a partition is delivered to only one consumer within the group, ensuring efficient parallel consumption.

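- ZooKeeper – Kafka has traditionally relied on Apache ZooKeeper for managing metadata, leader election, and cluster coordination. It helps brokers keep track of topics, partitions, and leader-follower configurations. Newer Kafka releases can instead use the built-in KRaft mode, and Kafka 4.0 removed the ZooKeeper dependency entirely.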
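To make the producer, topic, and partition roles more concrete, here is a minimal sketch of key-based partitioning. It assumes a hypothetical topic with three partitions and uses `zlib.crc32` as a stand-in hash; Kafka's default partitioner hashes keyed records with murmur2, so the actual partition numbers would differ. The helper names `choose_partition` and `send` are illustrative only.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_PARTITIONS = 3  # assumption: a topic with three partitions


def choose_partition(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition. crc32 is a stand-in for Kafka's murmur2."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Each partition is modelled as an append-only list; the index is the offset.
partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)


def send(key: str, value: str) -> Tuple[int, int]:
    """Append a record and return (partition, offset)."""
    partition = choose_partition(key)
    partitions[partition].append((key, value))
    return partition, len(partitions[partition]) - 1


send("user-42", "login")
send("user-7", "login")
send("user-42", "purchase")
send("user-42", "logout")

# All records for one key land in one partition, in the order they were sent.
user_42_partition = choose_partition("user-42")
user_42_events = [v for k, v in partitions[user_42_partition] if k == "user-42"]
```

Because the same key always maps to the same partition, per-key ordering is preserved even though the topic as a whole has no global order.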

Partitioning a topic is essential for scalability and parallel processing in a distributed messaging system like Apache Kafka. By dividing a topic into multiple partitions, Kafka distributes messages across different brokers, enabling higher throughput and load balancing. Within a consumer group, however, each partition is assigned to exactly one consumer at a time, so the messages in that partition are processed sequentially and in order. Consumers in different groups can still read the same partition independently.

Message ordering is crucial in scenarios where the sequence of events impacts the correctness of the application, such as financial transactions, event-driven workflows, or log processing. If messages were consumed out of order in these cases, it could lead to inconsistencies, incorrect state management, or transactional failures, making ordered processing a critical requirement.
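The one-consumer-per-partition rule inside a group can be illustrated with a simplified round-robin assignment. This is a sketch, not Kafka's actual assignor implementation; the function name `assign_partitions` and the consumer ids are hypothetical.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int],
                      consumers: List[str]) -> Dict[str, List[int]]:
    """Give every partition to exactly one consumer in the group."""
    if not consumers:
        return {}
    ordered = sorted(consumers)
    assignment: Dict[str, List[int]] = {c: [] for c in ordered}
    for i, partition in enumerate(sorted(partitions)):
        # Round-robin: partition i goes to consumer i modulo the group size.
        assignment[ordered[i % len(ordered)]].append(partition)
    return assignment


# Two consumers share three partitions.
two_consumers = assign_partitions([0, 1, 2], ["a", "b"])

# Four consumers, three partitions: one consumer stays idle.
four_consumers = assign_partitions([0, 1, 2], ["c1", "c2", "c3", "c4"])
```

With more consumers than partitions, the extra consumer receives nothing, which is why the partition count caps the useful parallelism of a single group.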

ZooKeeper is a centralized service used by Apache Kafka (in ZooKeeper-based deployments) to manage metadata and coordinate distributed components. It plays a crucial role in maintaining information about brokers, topics, and partitions. Metadata in Kafka includes details such as partition assignments and leader-follower relationships. Consumer offsets were also kept in ZooKeeper in early versions, but current versions store them in the internal `__consumer_offsets` topic. This information is necessary for fault tolerance, load balancing, and recovery after failures. Without metadata, Kafka would be unable to track which broker holds a given partition or which consumer should process a given message. ZooKeeper ensures that changes in the Kafka cluster, such as broker failures or partition reassignments, are propagated consistently, keeping the system consistent and highly available.

Leader-follower relationship

In Kafka, the leader-follower relationship ensures high availability and fault tolerance for partitions. Each partition in a topic has a designated leader replica, which by default handles all read and write operations from producers and consumers. The follower replicas replicate data from the leader and, by default, do not serve client requests. Since Kafka 2.4, consumers can optionally be configured to fetch from followers, but writes always go to the leader.

Followers replicate data continuously while the leader is healthy, which maintains redundancy and allows quick recovery in case of failure. If the leader crashes, one of the followers can be promoted to become the new leader with minimal downtime. Replication also improves data durability, reducing the risk of message loss by keeping multiple copies on different brokers. Kafka’s ISR (in-sync replicas) mechanism means that, unless unclean leader election is explicitly enabled, only up-to-date followers can be elected as leaders, preserving data integrity and preventing clients from reading stale or inconsistent data.
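The failover behaviour described above can be sketched as a small simulation. It is a simplified model, assuming that the first remaining in-sync replica is promoted; `PartitionState` and `handle_broker_failure` are hypothetical names, and Kafka's real controller logic is considerably more involved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PartitionState:
    replicas: List[int]     # broker ids holding a copy of the partition
    leader: Optional[int]   # broker id of the current leader, None if offline
    isr: List[int]          # in-sync replicas, including the leader


def handle_broker_failure(state: PartitionState, failed_broker: int) -> PartitionState:
    """Return the partition state after one broker fails."""
    isr = [b for b in state.isr if b != failed_broker]
    leader = state.leader
    if leader == failed_broker:
        # Only an in-sync replica may take over; otherwise the partition
        # stays offline (this sketch does not model unclean election).
        leader = isr[0] if isr else None
    return PartitionState(replicas=list(state.replicas), leader=leader, isr=isr)


# Broker 3 is a replica but has fallen behind, so it is not in the ISR.
initial = PartitionState(replicas=[1, 2, 3], leader=1, isr=[1, 2])

after_leader_loss = handle_broker_failure(initial, failed_broker=1)
after_second_loss = handle_broker_failure(after_leader_loss, failed_broker=2)
```

In this run broker 2 becomes leader after broker 1 fails. When broker 2 also fails, the partition has no eligible leader, because the lagging broker 3 is not in the ISR.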
