By implementing resilience patterns, organizations can mitigate infrastructure failures, application-level issues, and external dependency failures, improving stability, fault tolerance, and availability in modern distributed systems. These patterns are essential for cloud-native architectures, microservices, and high-scale applications.

A service can fail for numerous reasons, often categorized into infrastructure issues, application-level failures, and external dependencies. Here are the most common causes.

Infrastructure failures are underlying system-level issues that impact the availability and performance of services. They often stem from hardware faults, networking problems, or cloud service disruptions.

- Hardware Failures – Physical server crashes, disk failures, memory corruption, or power outages can cause downtime. In cloud environments, this can manifest as virtual machine failures.

- Network Issues – High latency, packet loss, unreliable DNS resolution, or misconfigured firewalls can break communication between services, leading to failed API calls and degraded performance.

- Cloud Provider Outages – Cloud services (AWS, Azure, GCP) occasionally suffer outages, affecting databases, storage, or compute resources that services rely on.

- Storage Failures – Corrupt file systems, database crashes, or issues with cloud storage (e.g., Amazon S3 downtime) can lead to data loss or inaccessibility.

- Scaling & Resource Constraints – Inadequate CPU, memory, or network bandwidth allocation can prevent services from functioning correctly under load.

Application-level failures occur due to bugs, configuration issues, inefficient code, or concurrency problems. These failures often manifest as crashes, slow response times, or incorrect processing of requests.

- Bugs & Unhandled Exceptions – Coding errors, unhandled null references, infinite loops, or incorrect logic can cause service crashes.

- Memory Leaks – Poor memory management leads to excessive memory consumption, eventually crashing the application.

- Concurrency & Race Conditions – Poorly synchronized multi-threaded operations can cause inconsistent states, leading to unpredictable behavior.

- Configuration Issues – Incorrect database connection strings, API keys, or environment variables can prevent services from functioning.

- Service Timeout Issues – When an application takes too long to process a request, dependent services may experience cascading failures.

Modern applications rely on external services, APIs, databases, and third-party integrations. Failures in these dependencies can disrupt critical application functionalities.

- Database Downtime or Slow Queries – If a database is unavailable or has performance bottlenecks, services that depend on it may fail or slow down.

- Third-Party API Failures – If an external API is down or rate-limited, services relying on it (e.g., payment gateways, authentication providers) may fail.

- Caching Failures – When caches like Redis or Memcached fail, the system might experience higher latency as it falls back to database queries.

- Message Queue Failures – If message brokers (Kafka, RabbitMQ) fail, services relying on asynchronous processing may not function correctly.

Resilience patterns such as Circuit Breaker, Rate Limiter, Retry, and Bulkhead are essential strategies for building fault-tolerant distributed systems. Let’s break them down and explore them one at a time.

Circuit Breaker

The Circuit Breaker pattern is a crucial resilience mechanism designed to prevent cascading failures in distributed systems. It acts as a safeguard by monitoring calls to a service and temporarily stopping requests when failures exceed a defined threshold. When a service experiences repeated timeouts, errors, or high latency, the circuit breaker “trips” (opens), and further calls to the failing component fail fast instead of being sent. This spares the system unnecessary load and gives the service time to recover instead of overwhelming it with requests that are likely to fail. The circuit remains open for a configured period, after which it transitions to a “half-open” state that allows a limited number of trial requests to test whether the service has recovered. If they succeed, the circuit “closes” and normal operation resumes; otherwise, it opens again for another waiting period.
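The state transitions described above can be sketched as a small class. This is a simplified, hypothetical example, not Resilience4j's implementation: it counts consecutive failures rather than a failure rate, allows a single trial call in the half-open state, and is not thread-safe.

```java
import java.time.Duration;
import java.util.function.Supplier;

// Hypothetical, single-threaded circuit breaker (not thread-safe).
// Opens after N consecutive failures, fails fast while open, and lets one
// trial call through (HALF_OPEN) once the open duration has elapsed.
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final Duration openDuration;
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAtNanos = 0L;

    SimpleCircuitBreaker(int failureThreshold, Duration openDuration) {
        this.failureThreshold = failureThreshold;
        this.openDuration = openDuration;
    }

    State state() {
        return state;
    }

    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (System.nanoTime() - openedAtNanos >= openDuration.toNanos()) {
                state = State.HALF_OPEN;   // cool-down over: allow one trial call
            } else {
                return fallback.get();     // fail fast without touching the dependency
            }
        }
        try {
            T result = action.get();
            consecutiveFailures = 0;       // success closes the circuit (or keeps it closed)
            state = State.CLOSED;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            // A failed trial call, or too many failures in a row, opens the circuit.
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;
                openedAtNanos = System.nanoTime();
            }
            return fallback.get();
        }
    }
}
```

In a real service the fallback would typically return a degraded response, such as cached data or a default message, rather than an error.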

The Circuit Breaker helps mitigate slow responses and timeouts from external dependencies, preventing them from degrading the overall system performance. Additionally, it enhances system stability by detecting failures early, isolating faulty components, and ensuring that transient issues do not escalate into full-scale outages.
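A circuit breaker can only react to a slow dependency if something bounds how long a call may take. Resilience4j, for example, pairs its circuit breaker with a separate TimeLimiter module and can also count slow calls through its slow-call thresholds. The sketch below swaps in plain JDK code (CompletableFuture.orTimeout, Java 9+) to illustrate the idea; the helper name callWithTimeout is hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical helper: gives a dependency call a fixed time budget and
// answers with a fallback if the call is too slow or throws.
class TimeLimitedCall {
    static <T> T callWithTimeout(Supplier<T> action, long timeoutMillis, Supplier<T> fallback) {
        // Note: a timed-out task is abandoned, not cancelled; it keeps running in the background.
        return CompletableFuture.supplyAsync(action)             // run the call on another thread
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS) // complete with TimeoutException if too slow
                .exceptionally(ex -> fallback.get())             // timeouts and errors both fall back
                .join();
    }
}
```

A call wrapped this way turns a hanging dependency into a quick, well-defined failure, which is exactly the signal a circuit breaker needs in order to count it.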

Below is a small example of a Circuit Breaker implementation using Java and Docker.

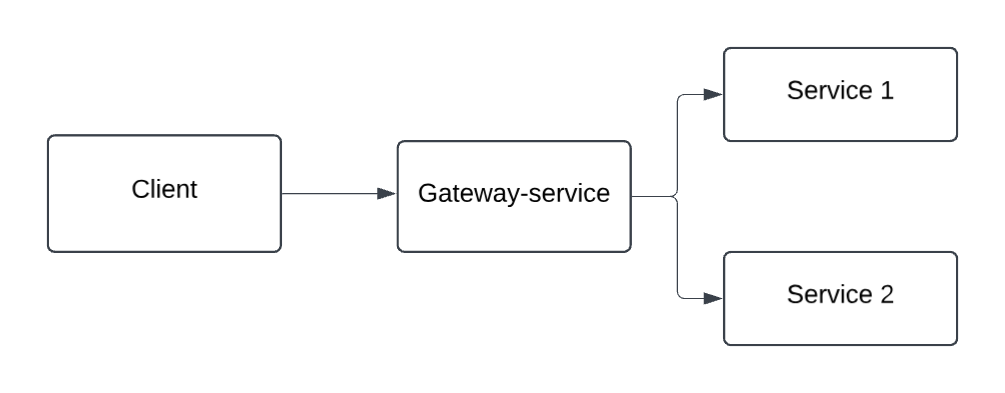

Two dependency services expose APIs for the proxy service to retrieve data, and we will simulate a scenario where one of the services is malfunctioning.

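```java
// data-service-1: fails on roughly half of all requests to simulate a malfunctioning dependency
import java.util.Random;

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class DataController1 {

    private final Random random = new Random();

    @GetMapping("/data")
    public String getData() {
        if (random.nextBoolean()) {
            throw new RuntimeException("Service 1 failure");
        }
        return "Data from Service 1";
    }
}
```

```java
// data-service-2: always healthy
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class DataController2 {

    @GetMapping("/data")
    public String getData() {
        return "Data from Service 2";
    }
}
```

```java
// gateway (proxy) service: calls Service 1 through the circuit breaker, Service 2 directly
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

@RestController
public class GatewayController {

    private final RestTemplate restTemplate = new RestTemplate();
    private final GatewayService gatewayService;

    public GatewayController(GatewayService gatewayService) {
        this.gatewayService = gatewayService;
    }

    @GetMapping("/fetch-data")
    public String fetchData() {
        // Called through the Spring proxy of GatewayService, so @CircuitBreaker applies
        String service1Response = gatewayService.fetchFromService1(restTemplate);
        String service2Response = restTemplate.getForObject("http://data-service-2:8080/data", String.class);
        return "Service 1: " + service1Response + " | Service 2: " + service2Response;
    }
}
```

```java
import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestTemplate;

@Service
public class GatewayService {

    @CircuitBreaker(name = "dataService1", fallbackMethod = "fallbackService1")
    public String fetchFromService1(RestTemplate restTemplate) {
        return restTemplate.getForObject("http://data-service-1:8080/data", String.class);
    }

    // Resilience4j finds the fallback by signature: same parameters as the
    // protected method, plus the exception as the last parameter.
    public String fallbackService1(RestTemplate restTemplate, Exception e) {
        return "Service 1 is unavailable (fallback)";
    }
}
```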

I set up a Docker Compose environment with the following structure.

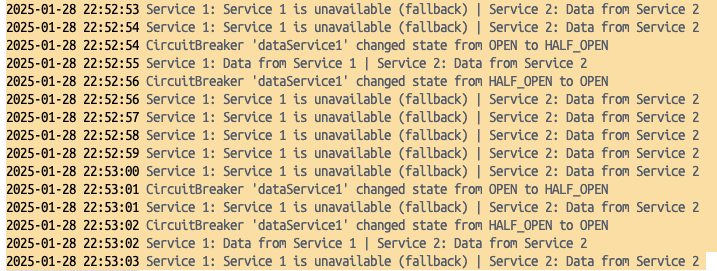

The client container runs a script that continuously sends cURL requests to the proxy service. The logs show that once the failure threshold is exceeded, the Circuit Breaker opens and stops sending requests to Service 1. In the client logs, failed requests appear just before the Circuit Breaker transitions to the OPEN state, indicating that the failure threshold has been reached.
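A failure threshold can be defined in several ways. Resilience4j, for instance, computes a failure rate over a sliding window of recent calls and opens the circuit when that rate reaches a configured percentage, once a minimum number of calls has been recorded. The following is a simplified, count-based sketch of that idea in plain Java; the class FailureRateWindow and its parameters are hypothetical and are not Resilience4j's API.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical count-based sliding window: remembers the outcomes of the last
// windowSize calls and reports when the failure rate reaches the threshold.
class FailureRateWindow {
    private final int windowSize;
    private final double failureRateThresholdPercent;
    private final Deque<Boolean> outcomes = new ArrayDeque<>();
    private int failures = 0;

    FailureRateWindow(int windowSize, double failureRateThresholdPercent) {
        this.windowSize = windowSize;
        this.failureRateThresholdPercent = failureRateThresholdPercent;
    }

    /** Records one call outcome; returns true if the circuit should open. */
    boolean record(boolean success) {
        outcomes.addLast(success);
        if (!success) {
            failures++;
        }
        // Evict the oldest outcome once the window is over capacity.
        if (outcomes.size() > windowSize) {
            boolean evictedSuccess = outcomes.removeFirst();
            if (!evictedSuccess) {
                failures--;
            }
        }
        // Don't judge until the window is full, so one early failure cannot trip it.
        if (outcomes.size() < windowSize) {
            return false;
        }
        double failureRate = 100.0 * failures / windowSize;
        return failureRate >= failureRateThresholdPercent;
    }
}
```

With a window of 4 calls and a 50% threshold, recording failure, success, success, failure returns true on the fourth call (2 of 4 failed). A following success evicts the oldest failure, dropping the rate to 25%.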

Bulkhead

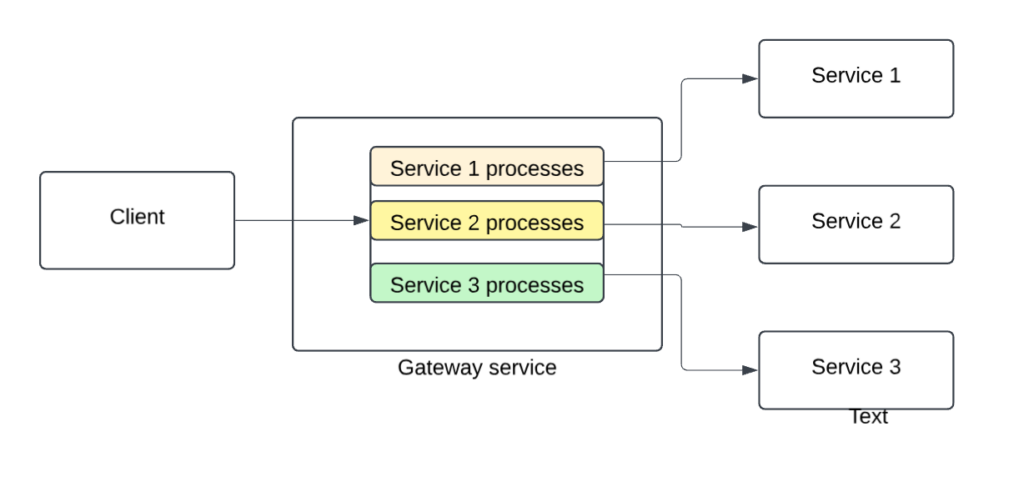

The Bulkhead pattern is another essential resilience strategy in distributed systems. Just as a ship is divided into separate, sealed compartments so that a breach in one does not sink the whole vessel, the Bulkhead pattern isolates different parts of a system so that localized failures do not cascade and bring down the whole application.

In software systems, the Bulkhead pattern works by allocating separate resource pools (such as threads, memory, or database connections) to different functions, services, or tenants. This segmentation ensures that if one part of the system encounters a failure—such as an overloaded API or a database connection issue—it does not consume all available resources and degrade other critical functions.

By preventing resource exhaustion in one area from affecting the entire system, the Bulkhead pattern enhances fault isolation, improves system stability, and ensures that partial failures do not escalate into total outages. It is especially useful in cloud-based applications where different workloads may have varying levels of importance and traffic patterns.
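To make the resource-pool idea concrete, here is a minimal sketch of a semaphore-based bulkhead in plain Java. The class SemaphoreBulkhead and its parameters are hypothetical; Resilience4j's semaphore bulkhead is similar in spirit, with settings such as maxConcurrentCalls and maxWaitDuration. Each dependency gets its own instance, so when one fills up, only callers of that dependency are turned away.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical semaphore-based bulkhead: caps how many calls to one dependency
// may run at the same time. Create one instance per dependency.
class SemaphoreBulkhead {
    private final Semaphore permits;
    private final long maxWaitMillis;

    SemaphoreBulkhead(int maxConcurrentCalls, long maxWaitMillis) {
        this.permits = new Semaphore(maxConcurrentCalls, true); // fair: waiters served in order
        this.maxWaitMillis = maxWaitMillis;
    }

    <T> T execute(Supplier<T> action, Supplier<T> rejected) {
        try {
            // Wait briefly for a free slot; if none frees up, reject instead of piling up callers.
            if (!permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS)) {
                return rejected.get();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return rejected.get();
        }
        try {
            return action.get();
        } finally {
            permits.release(); // always hand the slot back, even if the call throws
        }
    }
}
```

For instance, with one instance per worker and a limit of one concurrent call each, a stuck Worker 1 call makes further Worker 1 calls return the rejection value after a 10 ms wait, while Worker 3 calls still run normally.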

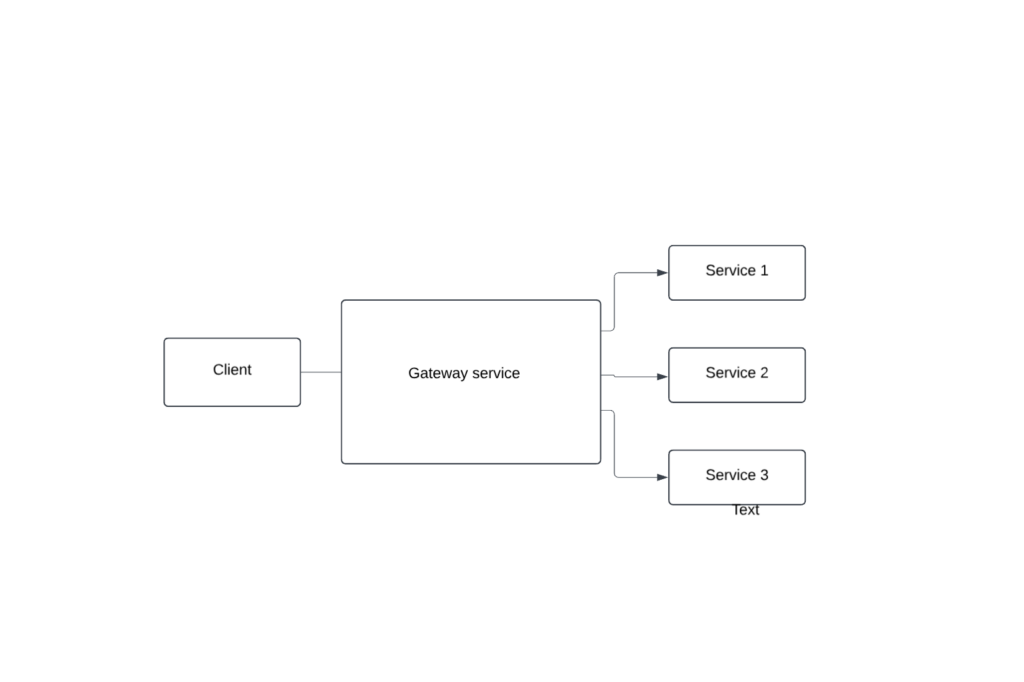

Let’s explore an example implementation using Java and Docker. The worker servers that provide the API for the gateway service wait for a configured delay before sending a response.

@RestController

public class WorkerServiceController {

@Value("${wait-time-seconds}")

private int waitTimeSeconds;

@GetMapping("/wait")

public String waitAndReturn() throws InterruptedException {

Thread.sleep(waitTimeSeconds * 1000L);

return "Waited for " + waitTimeSeconds + " seconds";

}

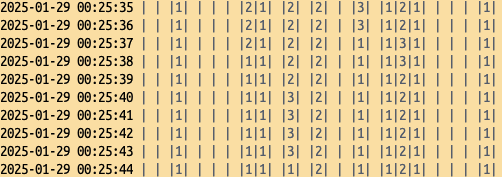

}Consider a scenario where clients are generating a high volume of requests to the gateway service. Over time, the service ends up processing only long-running tasks of type ID 1. As a result, even though a request of type ID 3 typically takes just 2 seconds to complete, it experiences a significant delay and takes 18 seconds to process.

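By leveraging the bulkhead pattern, we can give each worker its own isolated share of capacity, ensuring that a portion of the system’s resources always remains available for handling requests to Worker 3. This prevents long-running tasks from monopolizing all request threads, which improves response times and overall performance metrics. With Resilience4j, the @Bulkhead annotation enforces this by default with a semaphore that caps concurrent calls per bulkhead; dedicated thread pools are available through its thread-pool bulkhead type.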
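Dedicated per-worker thread pools can be sketched with plain JDK executors. The class ThreadPoolBulkheads and the worker names are hypothetical; each worker gets a small fixed-size pool with a bounded queue, so a backlog of slow Worker 1 tasks is rejected at its own boundary instead of delaying Worker 3.

```java
import java.util.Map;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical thread-pool bulkhead registry: every worker gets its own small,
// bounded executor, so saturating one pool leaves the others untouched.
class ThreadPoolBulkheads implements AutoCloseable {
    private final Map<String, ExecutorService> pools = new ConcurrentHashMap<>();

    void register(String worker, int threads, int queueCapacity) {
        // Fixed-size pool with a bounded queue; AbortPolicy rejects work once both are full.
        pools.put(worker, new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy()));
    }

    <T> Future<T> submit(String worker, Callable<T> task) {
        ExecutorService pool = pools.get(worker);
        if (pool == null) {
            throw new IllegalArgumentException("Unknown worker: " + worker);
        }
        // Throws RejectedExecutionException when this worker's pool and queue are full.
        return pool.submit(task);
    }

    @Override
    public void close() {
        pools.values().forEach(ExecutorService::shutdownNow);
    }
}
```

The bounded queue is the important design choice: an unbounded queue would isolate threads but still let Worker 1's backlog grow without limit.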

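```java
// Members of the gateway's @RestController class.
// @Bulkhead comes from io.github.resilience4j.bulkhead.annotation.Bulkhead.
@Autowired
private DependencyService dependencyService;

// Each worker endpoint has its own named bulkhead, so a flood of slow
// Worker 1 calls is capped instead of occupying every request thread.
@Bulkhead(name = "worker1Bulkhead")
@GetMapping("/request-worker-1")
public String requestWorker1() {
    return dependencyService.requestWorker1();
}

@Bulkhead(name = "worker2Bulkhead")
@GetMapping("/request-worker-2")
public String requestWorker2() {
    return dependencyService.requestWorker2();
}

@Bulkhead(name = "worker3Bulkhead")
@GetMapping("/request-worker-3")
public String requestWorker3() {
    return dependencyService.requestWorker3();
}
```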
